
Use ssh-copy-id so that the deployment machine can log in to the other three hosts without a password.

All machines share the same disk layout; vdb is planned as the Ceph data disk.

Download ceph-deploy

pip install ceph-deploy

Passwordless login and hostname setup

hostnamectl --static set-hostname ceph-0    # on each host in turn: ceph-0 through ceph-3

Configure hosts

172.17.163.105 ceph-0

172.17.112.206 ceph-1

172.17.227.100 ceph-2

172.17.67.157 ceph-3
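The key distribution and hostname steps above can be sketched as one loop. This is a minimal sketch, not the author's script: it treats all four hosts uniformly (including ceph-0 itself, which is an assumption), reuses the IP and name pairs from the hosts entries, and only prints the commands unless `RUN=1` is set. The `run` and `setup_hosts` helpers are hypothetical names.

```shell
#!/bin/sh
# Sketch only: prints the per-host setup commands instead of running them.
# Set RUN=1 to execute. IP:name pairs are copied from the hosts entries above.
HOSTS="172.17.163.105:ceph-0 172.17.112.206:ceph-1 172.17.227.100:ceph-2 172.17.67.157:ceph-3"

run() {
    # Echo the command in dry-run mode, execute it when RUN=1.
    if [ "${RUN:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

setup_hosts() {
    for pair in $HOSTS; do
        ip=${pair%%:*}      # text before the colon
        name=${pair##*:}    # text after the colon
        # Install the deployment machine's public key on the target.
        run ssh-copy-id "root@$ip"
        # Set the static hostname on the target.
        run ssh "root@$ip" hostnamectl --static set-hostname "$name"
    done
}

setup_hosts
```

Running it without `RUN=1` is a cheap way to review the eight commands before they touch any host.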

scp /etc/hosts root@ceph-1:/etc/hosts

scp /etc/hosts root@ceph-2:/etc/hosts

scp /etc/hosts root@ceph-3:/etc/hosts

Install the packages on the local machine first

rpm -ivhU liboath/liboath-*

Filter out the packages that cannot be installed

find rpmbuild/RPMS/ | grep \\.rpm | grep -v debug | grep -v k8s | grep -v mgr\-rook | grep -v mgr\-ssh | xargs -i echo "{} \\"

Add the user and install the software

useradd ceph

yum install -y rpmbuild/RPMS/noarch/ceph-mgr-dashboard-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/noarch/ceph-mgr-diskprediction-cloud-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/noarch/ceph-grafana-dashboards-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/noarch/ceph-mgr-diskprediction-local-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/aarch64/librgw-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librados-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-base-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rbd-mirror-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-ceph-argparse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librbd-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-test-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rados-objclass-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-rbd-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-mds-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-fuse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-rbd-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-mgr-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librbd1-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-cephfs-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/libcephfs2-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-rgw-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/libradospp-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rbd-nbd-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/libcephfs-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-mon-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-radosgw-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-ceph-argparse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-cephfs-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-rados-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rbd-fuse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librgw2-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-rgw-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-common-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librados2-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-ceph-compat-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-osd-14.2.10-0.oe1.bclinux.aarch64.rpm \

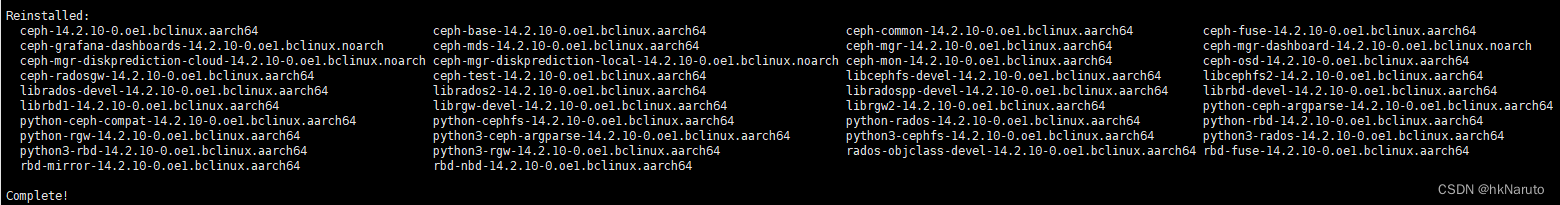

rpmbuild/RPMS/aarch64/python-rados-14.2.10-0.oe1.bclinux.aarch64.rpm安装成功日志(截图是二次reinstall)

Note: if the ceph user has not been created beforehand, the installation prints warnings.
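The filter and the user check above could be folded into two small helpers so that the long package list does not have to be pasted by hand. This is a sketch under assumptions: `list_rpms` and `ensure_ceph_user` are hypothetical names, and the filter matches on `*.rpm` file names rather than the original `grep \.rpm` on the whole path.

```shell
#!/bin/sh
# Sketch: list installable RPMs under a build tree, skipping the
# debug, k8s, mgr-rook and mgr-ssh packages that the filter above drops.
list_rpms() {
    # $1 is the RPMS directory, for example rpmbuild/RPMS
    find "$1" -type f -name '*.rpm' \
        | grep -v -e debug -e k8s -e mgr-rook -e mgr-ssh \
        | sort
}

# Create the ceph user only when it is missing, so reruns stay quiet.
ensure_ceph_user() {
    id ceph >/dev/null 2>&1 || useradd ceph
}

# Example usage (commented out because it changes the system):
#   ensure_ceph_user
#   list_rpms rpmbuild/RPMS | xargs yum install -y
```

Creating the user before the install avoids the warnings mentioned in the note above.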

Distribute the compiled RPM packages together with the el8 liboath

On ceph-0:

rsync -avr -P liboath root@ceph-1:~/

rsync -avr -P liboath root@ceph-2:~/

rsync -avr -P liboath root@ceph-3:~/

rsync -avr -P rpmbuild/RPMS root@ceph-1:~/rpmbuild/

rsync -avr -P rpmbuild/RPMS root@ceph-2:~/rpmbuild/

rsync -avr -P rpmbuild/RPMS root@ceph-3:~/rpmbuild/

Log in to ceph-1, ceph-2, and ceph-3 in turn and run the following (this could later be wrapped in Ansible)

cd ~

rpm -ivhU liboath/liboath-*

useradd ceph

yum install -y rpmbuild/RPMS/noarch/ceph-mgr-dashboard-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/noarch/ceph-mgr-diskprediction-cloud-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/noarch/ceph-grafana-dashboards-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/noarch/ceph-mgr-diskprediction-local-14.2.10-0.oe1.bclinux.noarch.rpm \

rpmbuild/RPMS/aarch64/librgw-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librados-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-base-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rbd-mirror-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-ceph-argparse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librbd-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-test-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rados-objclass-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-rbd-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-mds-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-fuse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-rbd-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-mgr-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librbd1-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-cephfs-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/libcephfs2-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-rgw-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/libradospp-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rbd-nbd-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/libcephfs-devel-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-mon-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-radosgw-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-ceph-argparse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-cephfs-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-rados-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/rbd-fuse-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librgw2-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python3-rgw-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-common-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/librados2-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/python-ceph-compat-14.2.10-0.oe1.bclinux.aarch64.rpm \

rpmbuild/RPMS/aarch64/ceph-osd-14.2.10-0.oe1.bclinux.aarch64.rpm \

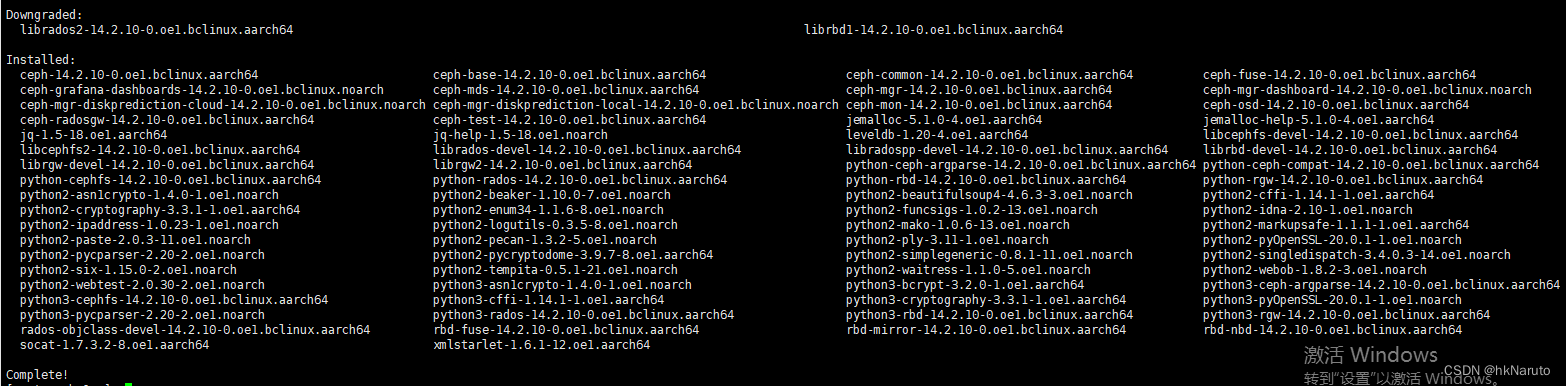

rpmbuild/RPMS/aarch64/python-rados-14.2.10-0.oe1.bclinux.aarch64.rpm安装日志
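Short of a full Ansible playbook, the per-node steps above could be driven from ceph-0 with a plain SSH loop. The sketch below is an assumption-laden illustration, not the procedure that was actually run: it replaces the explicit package list with the same find-and-filter pipeline used earlier, the `run` and `install_on_nodes` helpers are hypothetical, and by default it only prints the commands.

```shell
#!/bin/sh
# Sketch: drive the per-node steps from ceph-0 over SSH.
# Prints the commands by default; set RUN=1 to execute them.
NODES="ceph-1 ceph-2 ceph-3"

run() {
    if [ "${RUN:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

install_on_nodes() {
    for node in $NODES; do
        # Copy liboath and the built RPM tree, as in the rsync commands above.
        run rsync -avr -P liboath "root@$node:~/"
        run rsync -avr -P rpmbuild/RPMS "root@$node:~/rpmbuild/"
        # Install liboath, create the user if missing, then install every
        # RPM that survives the filter. The remote command is one string.
        run ssh "root@$node" "cd ~ && rpm -ivhU liboath/liboath-* && (id ceph >/dev/null 2>&1 || useradd ceph) && find rpmbuild/RPMS -name '*.rpm' | grep -v -e debug -e k8s -e mgr-rook -e mgr-ssh | xargs yum install -y"
    done
}

install_on_nodes
```

The dry-run default keeps the loop safe to paste; review the nine printed commands, then rerun with `RUN=1`.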

Time synchronization with ntpdate

On ceph-0:

yum install ntpdate

Edit /etc/ntp.conf

driftfile /var/lib/ntp/drift

restrict default nomodify notrap nopeer noepeer noquery

restrict source nomodify notrap noepeer noquery

restrict 127.0.0.1

restrict ::1

tos maxclock 5

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys

server asia.pool.ntp.org

Start ntpd

systemctl enable ntpd --now

On ceph-1, ceph-2, and ceph-3:

yum install -y ntpdate

Edit /etc/ntp.conf

driftfile /var/lib/ntp/drift

restrict default nomodify notrap nopeer noepeer noquery

restrict source nomodify notrap noepeer noquery

restrict 127.0.0.1

restrict ::1

tos maxclock 5

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys

server ceph-0

Start ntpd

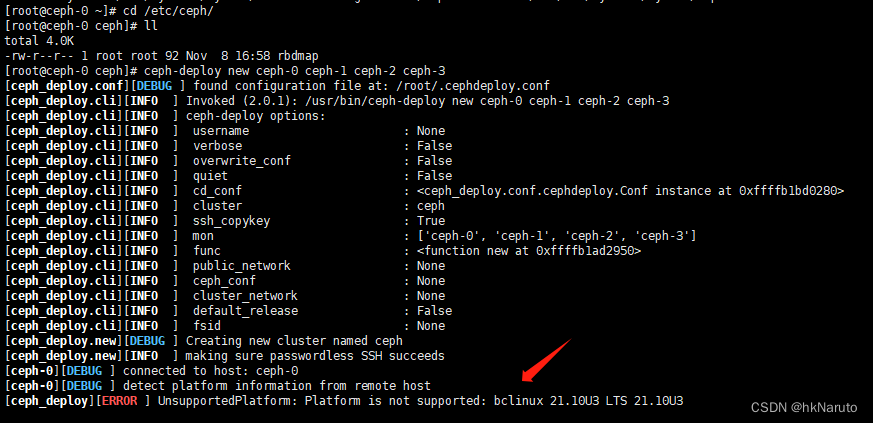

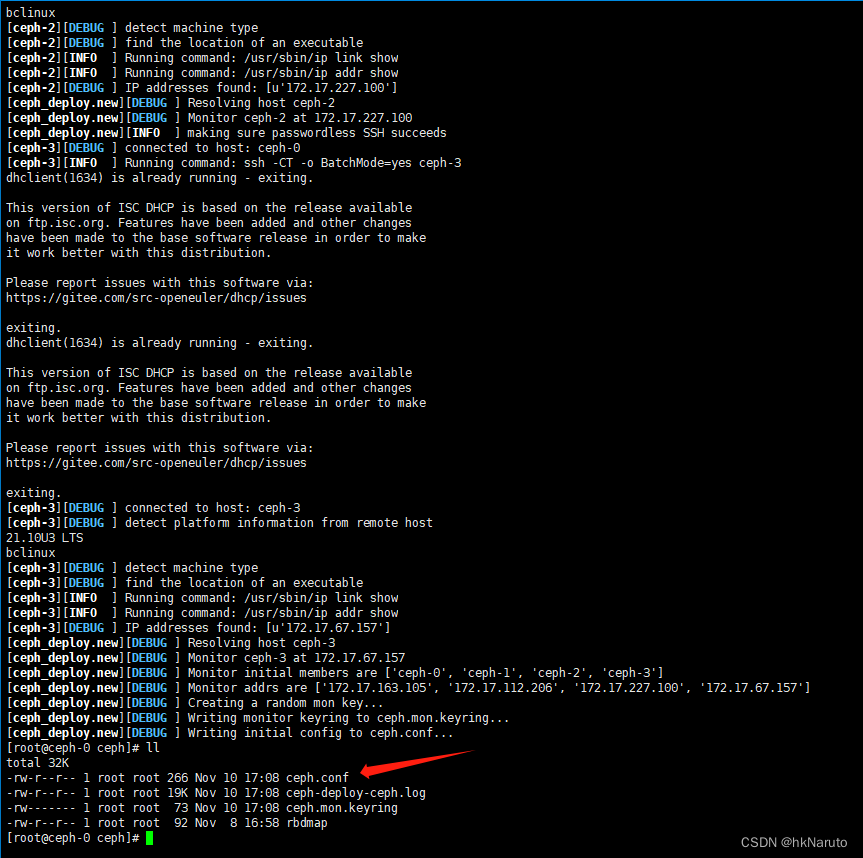

systemctl enable ntpd --now

Deploy the mon nodes and generate ceph.conf

cd /etc/ceph/

ceph-deploy new ceph-0 ceph-1 ceph-2 ceph-3

This fails with the following error:

[ceph_deploy][ERROR ] UnsupportedPlatform: Platform is not supported: bclinux 21.10U3 LTS 21.10U3

Edit /usr/lib/python2.7/site-packages/ceph_deploy/calamari.py and add bclinux to the supported list. The diff is as follows:

[root@ceph-0 ceph_deploy]# diff calamari.py calamari.py.bak -Npr

*** calamari.py 2023-11-10 16:56:49.445013228 +0800

--- calamari.py.bak 2023-11-10 16:56:14.793013228 +0800

*************** def distro_is_supported(distro_name):

*** 13,19 ****

      An enforcer of supported distros that can differ from what ceph-deploy

      supports.

      """

!     supported = ['centos', 'redhat', 'ubuntu', 'debian', 'bclinux']

      if distro_name in supported:

          return True

      return False

--- 13,19 ----

      An enforcer of supported distros that can differ from what ceph-deploy

      supports.

      """

!     supported = ['centos', 'redhat', 'ubuntu', 'debian']

      if distro_name in supported:

          return True

      return False
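The same one-line change to calamari.py could be applied with sed instead of a manual edit. This is a minimal sketch, assuming the `supported` list ends in `'debian']` exactly as in the diff above; `patch_calamari` is a hypothetical helper name. It keeps a `.bak` copy, matching the backup file name used in the diff, and does nothing if bclinux is already listed.

```shell
#!/bin/sh
# Sketch: add 'bclinux' to the supported list in calamari.py.
# Keeps a .bak backup and is a no-op when the entry is already present.
patch_calamari() {
    file=$1
    if grep -q "'bclinux'" "$file"; then
        echo "already patched: $file"
        return 0
    fi
    cp -p "$file" "$file.bak"
    # Rewrite the end of the list: 'debian'] becomes 'debian', 'bclinux']
    sed "s/'debian']/'debian', 'bclinux']/" "$file.bak" > "$file"
    echo "patched: $file"
}

# Example (path taken from the text above):
#   patch_calamari /usr/lib/python2.7/site-packages/ceph_deploy/calamari.py
```

Because the function checks for the entry first, it can be rerun safely after a ceph-deploy reinstall overwrites the file.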

Edit /usr/lib/python2.7/site-packages/ceph_deploy/hosts/__init__.py

[root@ceph-0 ceph_deploy]# diff -Npr hosts/__init__.py hosts/__init__.py.bak

*** hosts/__init__.py 2023-11-10 17:06:27.585013228 +0800

--- hosts/__init__.py.bak 2023-11-10 17:05:48.697013228 +0800

*************** def _get_distro(distro, fallback=None, u

*** 101,107 ****

          'fedora': fedora,

          'suse': suse,

          'virtuozzo': centos,

-         'bclinux': centos,

          'arch': arch

          }

--- 101,106 ----

Rerun the command:

ceph-deploy new ceph-0 ceph-1 ceph-2 ceph-3

ceph.conf is generated successfully. Log of the run:

[root@ceph-0 ceph]# ceph-deploy new ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xffffb246c280>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph-0', 'ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.cli][INFO ] func : <function new at 0xffffb236e9d0>

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-0][DEBUG ] connected to host: ceph-0

[ceph-0][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-0][DEBUG ] detect machine type

[ceph-0][DEBUG ] find the location of an executable

[ceph-0][INFO ] Running command: /usr/sbin/ip link show

[ceph-0][INFO ] Running command: /usr/sbin/ip addr show

[ceph-0][DEBUG ] IP addresses found: [u'172.18.0.1', u'172.17.163.105']

[ceph_deploy.new][DEBUG ] Resolving host ceph-0

[ceph_deploy.new][DEBUG ] Monitor ceph-0 at 172.17.163.105

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-1][DEBUG ] connected to host: ceph-0

[ceph-1][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-1

dhclient(1613) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

dhclient(1613) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: /usr/sbin/ip link show

[ceph-1][INFO ] Running command: /usr/sbin/ip addr show

[ceph-1][DEBUG ] IP addresses found: [u'172.17.112.206']

[ceph_deploy.new][DEBUG ] Resolving host ceph-1

[ceph_deploy.new][DEBUG ] Monitor ceph-1 at 172.17.112.206

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-2][DEBUG ] connected to host: ceph-0

[ceph-2][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-2

dhclient(1626) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

dhclient(1626) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] find the location of an executable

[ceph-2][INFO ] Running command: /usr/sbin/ip link show

[ceph-2][INFO ] Running command: /usr/sbin/ip addr show

[ceph-2][DEBUG ] IP addresses found: [u'172.17.227.100']

[ceph_deploy.new][DEBUG ] Resolving host ceph-2

[ceph_deploy.new][DEBUG ] Monitor ceph-2 at 172.17.227.100

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-3][DEBUG ] connected to host: ceph-0

[ceph-3][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-3

dhclient(1634) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

dhclient(1634) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] find the location of an executable

[ceph-3][INFO ] Running command: /usr/sbin/ip link show

[ceph-3][INFO ] Running command: /usr/sbin/ip addr show

[ceph-3][DEBUG ] IP addresses found: [u'172.17.67.157']

[ceph_deploy.new][DEBUG ] Resolving host ceph-3

[ceph_deploy.new][DEBUG ] Monitor ceph-3 at 172.17.67.157

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-0', 'ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['172.17.163.105', '172.17.112.206', '172.17.227.100', '172.17.67.157']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

The automatically generated /etc/ceph/ceph.conf reads as follows:

[global]

fsid = ff72b496-d036-4f1b-b2ad-55358f3c16cb

mon_initial_members = ceph-0, ceph-1, ceph-2, ceph-3

mon_host = 172.17.163.105,172.17.112.206,172.17.227.100,172.17.67.157

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

Because this is only a test environment and no second network is attached, the public_network parameter is left unset for now.
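If public_network were to be set later, one way is to append it to the generated file, which contains only a [global] section. The sketch below is illustrative: `set_public_network` is a hypothetical helper, and the 172.17.0.0/16 value in the example is an assumption inferred from the four monitor addresses, not a setting taken from the original environment.

```shell
#!/bin/sh
# Sketch: add public_network to a ceph.conf that contains only [global],
# as the generated file above does. The CIDR passed in is the caller's
# choice; the example value below is an assumption.
set_public_network() {
    conf=$1
    cidr=$2
    if grep -q '^public_network' "$conf"; then
        echo "public_network already set in $conf"
        return 0
    fi
    # Appending works only because [global] is the last (and only) section.
    printf 'public_network = %s\n' "$cidr" >> "$conf"
}

# Example, assuming all four monitors sit in 172.17.0.0/16:
#   set_public_network /etc/ceph/ceph.conf 172.17.0.0/16
```

For a file with several sections, the line would need to be inserted under [global] rather than appended.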

Deploy the monitors

cd /etc/ceph

ceph-deploy mon create ceph-0 ceph-1 ceph-2 ceph-3

The command fails:

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[ceph-3][ERROR ] Traceback (most recent call last):

[ceph-3][ERROR ] File "/bin/ceph", line 151, in <module>

[ceph-3][ERROR ] from ceph_daemon import admin_socket, DaemonWatcher, Termsize

[ceph-3][ERROR ] File "/usr/lib/python2.7/site-packages/ceph_daemon.py", line 24, in <module>

[ceph-3][ERROR ] from prettytable import PrettyTable, HEADER

[ceph-3][ERROR ] ImportError: No module named prettytable

[ceph-3][WARNIN] monitor: mon.ceph-3, might not be running yet

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[ceph-3][ERROR ] Traceback (most recent call last):

[ceph-3][ERROR ] File "/bin/ceph", line 151, in <module>

[ceph-3][ERROR ] from ceph_daemon import admin_socket, DaemonWatcher, Termsize

[ceph-3][ERROR ] File "/usr/lib/python2.7/site-packages/ceph_daemon.py", line 24, in <module>

[ceph-3][ERROR ] from prettytable import PrettyTable, HEADER

[ceph-3][ERROR ] ImportError: No module named prettytable

[ceph-3][WARNIN] monitor ceph-3 does not exist in monmap

[ceph-3][WARNIN] neither `public_addr` nor `public_network` keys are defined for monitors

[ceph-3][WARNIN] monitors may not be able to form quorum

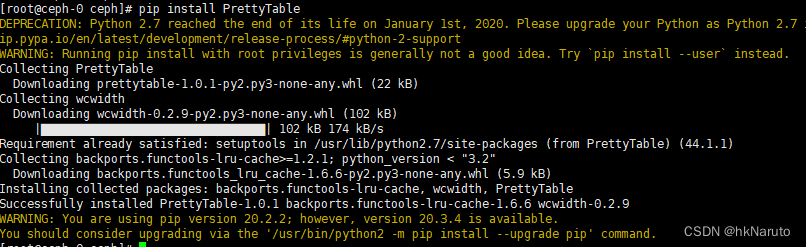

The cause is the missing prettytable module (No module named prettytable). Install it with pip:

pip install PrettyTable

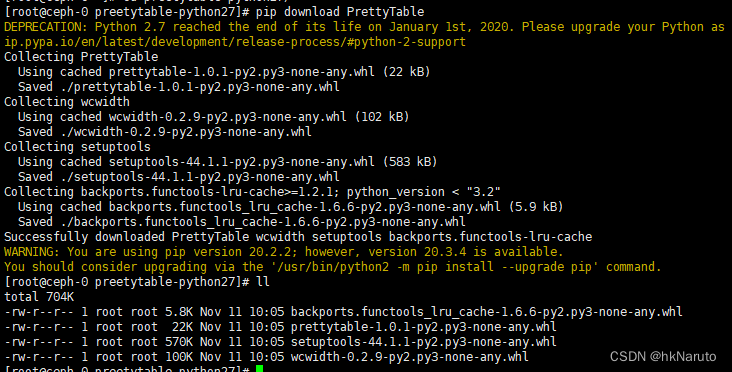

Download the offline package

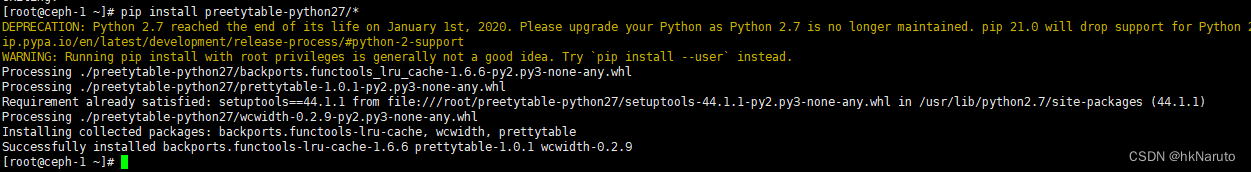

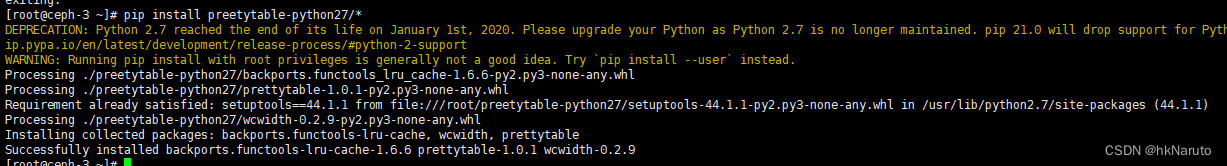

Distribute it to the other three machines for offline installation

rsync -avr -P preetytable-python27 root@ceph-1:~/

rsync -avr -P preetytable-python27 root@ceph-2:~/

rsync -avr -P preetytable-python27 root@ceph-3:~/
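The offline install on the other nodes might look like the loop below. The screenshots do not show the exact commands or the contents of the preetytable-python27 directory, so this sketch assumes it holds files pip can install (wheels or source archives) and uses pip's `--no-index` and `--find-links` options. The `run` and `install_prettytable_offline` helpers are hypothetical, and the commands are only printed unless `RUN=1` is set.

```shell
#!/bin/sh
# Sketch: install prettytable offline on the other nodes.
# Assumes preetytable-python27 holds pip-installable files; the
# screenshots do not show its contents.
NODES="ceph-1 ceph-2 ceph-3"
PKG_DIR="preetytable-python27"

run() {
    if [ "${RUN:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

install_prettytable_offline() {
    for node in $NODES; do
        run rsync -avr -P "$PKG_DIR" "root@$node:~/"
        # --no-index keeps pip off the network; --find-links points it
        # at the copied directory instead.
        run ssh "root@$node" "pip install --no-index --find-links ~/$PKG_DIR prettytable"
    done
}

install_prettytable_offline
```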

Deploy the monitors again

cd /etc/ceph

ceph-deploy mon create ceph-0 ceph-1 ceph-2 ceph-3

Log output:

[root@ceph-0 ~]# cd /etc/ceph

[root@ceph-0 ceph]# ceph-deploy mon create ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon create ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xffff992fb320>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['ceph-0', 'ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.cli][INFO ] func : <function mon at 0xffff993967d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-0 ...

[ceph-0][DEBUG ] connected to host: ceph-0

[ceph-0][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-0][DEBUG ] detect machine type

[ceph-0][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: bclinux 21.10U3 21.10U3 LTS

[ceph-0][DEBUG ] determining if provided host has same hostname in remote

[ceph-0][DEBUG ] get remote short hostname

[ceph-0][DEBUG ] deploying mon to ceph-0

[ceph-0][DEBUG ] get remote short hostname

[ceph-0][DEBUG ] remote hostname: ceph-0

[ceph-0][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-0][DEBUG ] create the mon path if it does not exist

[ceph-0][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-0/done

[ceph-0][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-0][DEBUG ] create the init path if it does not exist

[ceph-0][INFO ] Running command: systemctl enable ceph.target

[ceph-0][INFO ] Running command: systemctl enable ceph-mon@ceph-0

[ceph-0][INFO ] Running command: systemctl start ceph-mon@ceph-0

[ceph-0][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-0.asok mon_status

[ceph-0][DEBUG ] ********************************************************************************

[ceph-0][DEBUG ] status for monitor: mon.ceph-0

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "election_epoch": 8,

[ceph-0][DEBUG ] "extra_probe_peers": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ],

[ceph-0][DEBUG ] "feature_map": {

[ceph-0][DEBUG ] "mon": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "features": "0x3ffddff8ffacffff",

[ceph-0][DEBUG ] "num": 1,

[ceph-0][DEBUG ] "release": "luminous"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "features": {

[ceph-0][DEBUG ] "quorum_con": "4611087854031667199",

[ceph-0][DEBUG ] "quorum_mon": [

[ceph-0][DEBUG ] "kraken",

[ceph-0][DEBUG ] "luminous",

[ceph-0][DEBUG ] "mimic",

[ceph-0][DEBUG ] "osdmap-prune",

[ceph-0][DEBUG ] "nautilus"

[ceph-0][DEBUG ] ],

[ceph-0][DEBUG ] "required_con": "2449958747315912708",

[ceph-0][DEBUG ] "required_mon": [

[ceph-0][DEBUG ] "kraken",

[ceph-0][DEBUG ] "luminous",

[ceph-0][DEBUG ] "mimic",

[ceph-0][DEBUG ] "osdmap-prune",

[ceph-0][DEBUG ] "nautilus"

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "monmap": {

[ceph-0][DEBUG ] "created": "2023-11-11 09:54:05.372287",

[ceph-0][DEBUG ] "epoch": 1,

[ceph-0][DEBUG ] "features": {

[ceph-0][DEBUG ] "optional": [],

[ceph-0][DEBUG ] "persistent": [

[ceph-0][DEBUG ] "kraken",

[ceph-0][DEBUG ] "luminous",

[ceph-0][DEBUG ] "mimic",

[ceph-0][DEBUG ] "osdmap-prune",

[ceph-0][DEBUG ] "nautilus"

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "fsid": "ff72b496-d036-4f1b-b2ad-55358f3c16cb",

[ceph-0][DEBUG ] "min_mon_release": 14,

[ceph-0][DEBUG ] "min_mon_release_name": "nautilus",

[ceph-0][DEBUG ] "modified": "2023-11-11 09:54:05.372287",

[ceph-0][DEBUG ] "mons": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.67.157:6789/0",

[ceph-0][DEBUG ] "name": "ceph-3",

[ceph-0][DEBUG ] "public_addr": "172.17.67.157:6789/0",

[ceph-0][DEBUG ] "public_addrs": {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "rank": 0

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.112.206:6789/0",

[ceph-0][DEBUG ] "name": "ceph-1",

[ceph-0][DEBUG ] "public_addr": "172.17.112.206:6789/0",

[ceph-0][DEBUG ] "public_addrs": {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "rank": 1

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.163.105:6789/0",

[ceph-0][DEBUG ] "name": "ceph-0",

[ceph-0][DEBUG ] "public_addr": "172.17.163.105:6789/0",

[ceph-0][DEBUG ] "public_addrs": {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "rank": 2

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.227.100:6789/0",

[ceph-0][DEBUG ] "name": "ceph-2",

[ceph-0][DEBUG ] "public_addr": "172.17.227.100:6789/0",

[ceph-0][DEBUG ] "public_addrs": {

[ceph-0][DEBUG ] "addrvec": [

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v2"

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] {

[ceph-0][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-0][DEBUG ] "nonce": 0,

[ceph-0][DEBUG ] "type": "v1"

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "rank": 3

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ]

[ceph-0][DEBUG ] },

[ceph-0][DEBUG ] "name": "ceph-0",

[ceph-0][DEBUG ] "outside_quorum": [],

[ceph-0][DEBUG ] "quorum": [

[ceph-0][DEBUG ] 0,

[ceph-0][DEBUG ] 1,

[ceph-0][DEBUG ] 2,

[ceph-0][DEBUG ] 3

[ceph-0][DEBUG ] ],

[ceph-0][DEBUG ] "quorum_age": 917,

[ceph-0][DEBUG ] "rank": 2,

[ceph-0][DEBUG ] "state": "peon",

[ceph-0][DEBUG ] "sync_provider": []

[ceph-0][DEBUG ] }

[ceph-0][DEBUG ] ********************************************************************************

[ceph-0][INFO ] monitor: mon.ceph-0 is running

[ceph-0][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-0.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-1 ...

dhclient(1613) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

dhclient(1613) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: bclinux 21.10U3 21.10U3 LTS

[ceph-1][DEBUG ] determining if provided host has same hostname in remote

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] deploying mon to ceph-1

[ceph-1][DEBUG ] get remote short hostname

[ceph-1][DEBUG ] remote hostname: ceph-1

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-1][DEBUG ] create the mon path if it does not exist

[ceph-1][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-1/done

[ceph-1][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-1][DEBUG ] create the init path if it does not exist

[ceph-1][INFO ] Running command: systemctl enable ceph.target

[ceph-1][INFO ] Running command: systemctl enable ceph-mon@ceph-1

[ceph-1][INFO ] Running command: systemctl start ceph-mon@ceph-1

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph-1][DEBUG ] ********************************************************************************

[ceph-1][DEBUG ] status for monitor: mon.ceph-1

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "election_epoch": 8,

[ceph-1][DEBUG ] "extra_probe_peers": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ],

[ceph-1][DEBUG ] "feature_map": {

[ceph-1][DEBUG ] "mon": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "features": "0x3ffddff8ffacffff",

[ceph-1][DEBUG ] "num": 1,

[ceph-1][DEBUG ] "release": "luminous"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "features": {

[ceph-1][DEBUG ] "quorum_con": "4611087854031667199",

[ceph-1][DEBUG ] "quorum_mon": [

[ceph-1][DEBUG ] "kraken",

[ceph-1][DEBUG ] "luminous",

[ceph-1][DEBUG ] "mimic",

[ceph-1][DEBUG ] "osdmap-prune",

[ceph-1][DEBUG ] "nautilus"

[ceph-1][DEBUG ] ],

[ceph-1][DEBUG ] "required_con": "2449958747315912708",

[ceph-1][DEBUG ] "required_mon": [

[ceph-1][DEBUG ] "kraken",

[ceph-1][DEBUG ] "luminous",

[ceph-1][DEBUG ] "mimic",

[ceph-1][DEBUG ] "osdmap-prune",

[ceph-1][DEBUG ] "nautilus"

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "monmap": {

[ceph-1][DEBUG ] "created": "2023-11-11 09:54:05.372287",

[ceph-1][DEBUG ] "epoch": 1,

[ceph-1][DEBUG ] "features": {

[ceph-1][DEBUG ] "optional": [],

[ceph-1][DEBUG ] "persistent": [

[ceph-1][DEBUG ] "kraken",

[ceph-1][DEBUG ] "luminous",

[ceph-1][DEBUG ] "mimic",

[ceph-1][DEBUG ] "osdmap-prune",

[ceph-1][DEBUG ] "nautilus"

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "fsid": "ff72b496-d036-4f1b-b2ad-55358f3c16cb",

[ceph-1][DEBUG ] "min_mon_release": 14,

[ceph-1][DEBUG ] "min_mon_release_name": "nautilus",

[ceph-1][DEBUG ] "modified": "2023-11-11 09:54:05.372287",

[ceph-1][DEBUG ] "mons": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.67.157:6789/0",

[ceph-1][DEBUG ] "name": "ceph-3",

[ceph-1][DEBUG ] "public_addr": "172.17.67.157:6789/0",

[ceph-1][DEBUG ] "public_addrs": {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "rank": 0

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.112.206:6789/0",

[ceph-1][DEBUG ] "name": "ceph-1",

[ceph-1][DEBUG ] "public_addr": "172.17.112.206:6789/0",

[ceph-1][DEBUG ] "public_addrs": {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "rank": 1

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.163.105:6789/0",

[ceph-1][DEBUG ] "name": "ceph-0",

[ceph-1][DEBUG ] "public_addr": "172.17.163.105:6789/0",

[ceph-1][DEBUG ] "public_addrs": {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "rank": 2

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.227.100:6789/0",

[ceph-1][DEBUG ] "name": "ceph-2",

[ceph-1][DEBUG ] "public_addr": "172.17.227.100:6789/0",

[ceph-1][DEBUG ] "public_addrs": {

[ceph-1][DEBUG ] "addrvec": [

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v2"

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] {

[ceph-1][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-1][DEBUG ] "nonce": 0,

[ceph-1][DEBUG ] "type": "v1"

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "rank": 3

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ]

[ceph-1][DEBUG ] },

[ceph-1][DEBUG ] "name": "ceph-1",

[ceph-1][DEBUG ] "outside_quorum": [],

[ceph-1][DEBUG ] "quorum": [

[ceph-1][DEBUG ] 0,

[ceph-1][DEBUG ] 1,

[ceph-1][DEBUG ] 2,

[ceph-1][DEBUG ] 3

[ceph-1][DEBUG ] ],

[ceph-1][DEBUG ] "quorum_age": 921,

[ceph-1][DEBUG ] "rank": 1,

[ceph-1][DEBUG ] "state": "peon",

[ceph-1][DEBUG ] "sync_provider": []

[ceph-1][DEBUG ] }

[ceph-1][DEBUG ] ********************************************************************************

[ceph-1][INFO ] monitor: mon.ceph-1 is running

[ceph-1][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-1.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-2 ...

dhclient(1626) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: bclinux 21.10U3 21.10U3 LTS

[ceph-2][DEBUG ] determining if provided host has same hostname in remote

[ceph-2][DEBUG ] get remote short hostname

[ceph-2][DEBUG ] deploying mon to ceph-2

[ceph-2][DEBUG ] get remote short hostname

[ceph-2][DEBUG ] remote hostname: ceph-2

[ceph-2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-2][DEBUG ] create the mon path if it does not exist

[ceph-2][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-2/done

[ceph-2][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-2][DEBUG ] create the init path if it does not exist

[ceph-2][INFO ] Running command: systemctl enable ceph.target

[ceph-2][INFO ] Running command: systemctl enable ceph-mon@ceph-2

[ceph-2][INFO ] Running command: systemctl start ceph-mon@ceph-2

[ceph-2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-2.asok mon_status

[ceph-2][DEBUG ] ********************************************************************************

[ceph-2][DEBUG ] status for monitor: mon.ceph-2

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "election_epoch": 8,

[ceph-2][DEBUG ] "extra_probe_peers": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ],

[ceph-2][DEBUG ] "feature_map": {

[ceph-2][DEBUG ] "mon": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "features": "0x3ffddff8ffacffff",

[ceph-2][DEBUG ] "num": 1,

[ceph-2][DEBUG ] "release": "luminous"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "features": {

[ceph-2][DEBUG ] "quorum_con": "4611087854031667199",

[ceph-2][DEBUG ] "quorum_mon": [

[ceph-2][DEBUG ] "kraken",

[ceph-2][DEBUG ] "luminous",

[ceph-2][DEBUG ] "mimic",

[ceph-2][DEBUG ] "osdmap-prune",

[ceph-2][DEBUG ] "nautilus"

[ceph-2][DEBUG ] ],

[ceph-2][DEBUG ] "required_con": "2449958747315912708",

[ceph-2][DEBUG ] "required_mon": [

[ceph-2][DEBUG ] "kraken",

[ceph-2][DEBUG ] "luminous",

[ceph-2][DEBUG ] "mimic",

[ceph-2][DEBUG ] "osdmap-prune",

[ceph-2][DEBUG ] "nautilus"

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "monmap": {

[ceph-2][DEBUG ] "created": "2023-11-11 09:54:05.372287",

[ceph-2][DEBUG ] "epoch": 1,

[ceph-2][DEBUG ] "features": {

[ceph-2][DEBUG ] "optional": [],

[ceph-2][DEBUG ] "persistent": [

[ceph-2][DEBUG ] "kraken",

[ceph-2][DEBUG ] "luminous",

[ceph-2][DEBUG ] "mimic",

[ceph-2][DEBUG ] "osdmap-prune",

[ceph-2][DEBUG ] "nautilus"

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "fsid": "ff72b496-d036-4f1b-b2ad-55358f3c16cb",

[ceph-2][DEBUG ] "min_mon_release": 14,

[ceph-2][DEBUG ] "min_mon_release_name": "nautilus",

[ceph-2][DEBUG ] "modified": "2023-11-11 09:54:05.372287",

[ceph-2][DEBUG ] "mons": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.67.157:6789/0",

[ceph-2][DEBUG ] "name": "ceph-3",

[ceph-2][DEBUG ] "public_addr": "172.17.67.157:6789/0",

[ceph-2][DEBUG ] "public_addrs": {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "rank": 0

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.112.206:6789/0",

[ceph-2][DEBUG ] "name": "ceph-1",

[ceph-2][DEBUG ] "public_addr": "172.17.112.206:6789/0",

[ceph-2][DEBUG ] "public_addrs": {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "rank": 1

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.163.105:6789/0",

[ceph-2][DEBUG ] "name": "ceph-0",

[ceph-2][DEBUG ] "public_addr": "172.17.163.105:6789/0",

[ceph-2][DEBUG ] "public_addrs": {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "rank": 2

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.227.100:6789/0",

[ceph-2][DEBUG ] "name": "ceph-2",

[ceph-2][DEBUG ] "public_addr": "172.17.227.100:6789/0",

[ceph-2][DEBUG ] "public_addrs": {

[ceph-2][DEBUG ] "addrvec": [

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v2"

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] {

[ceph-2][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-2][DEBUG ] "nonce": 0,

[ceph-2][DEBUG ] "type": "v1"

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "rank": 3

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ]

[ceph-2][DEBUG ] },

[ceph-2][DEBUG ] "name": "ceph-2",

[ceph-2][DEBUG ] "outside_quorum": [],

[ceph-2][DEBUG ] "quorum": [

[ceph-2][DEBUG ] 0,

[ceph-2][DEBUG ] 1,

[ceph-2][DEBUG ] 2,

[ceph-2][DEBUG ] 3

[ceph-2][DEBUG ] ],

[ceph-2][DEBUG ] "quorum_age": 926,

[ceph-2][DEBUG ] "rank": 3,

[ceph-2][DEBUG ] "state": "peon",

[ceph-2][DEBUG ] "sync_provider": []

[ceph-2][DEBUG ] }

[ceph-2][DEBUG ] ********************************************************************************

[ceph-2][INFO ] monitor: mon.ceph-2 is running

[ceph-2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-2.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-3 ...

dhclient(1634) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: bclinux 21.10U3 21.10U3 LTS

[ceph-3][DEBUG ] determining if provided host has same hostname in remote

[ceph-3][DEBUG ] get remote short hostname

[ceph-3][DEBUG ] deploying mon to ceph-3

[ceph-3][DEBUG ] get remote short hostname

[ceph-3][DEBUG ] remote hostname: ceph-3

[ceph-3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-3][DEBUG ] create the mon path if it does not exist

[ceph-3][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph-3/done

[ceph-3][DEBUG ] create a done file to avoid re-doing the mon deployment

[ceph-3][DEBUG ] create the init path if it does not exist

[ceph-3][INFO ] Running command: systemctl enable ceph.target

[ceph-3][INFO ] Running command: systemctl enable ceph-mon@ceph-3

[ceph-3][INFO ] Running command: systemctl start ceph-mon@ceph-3

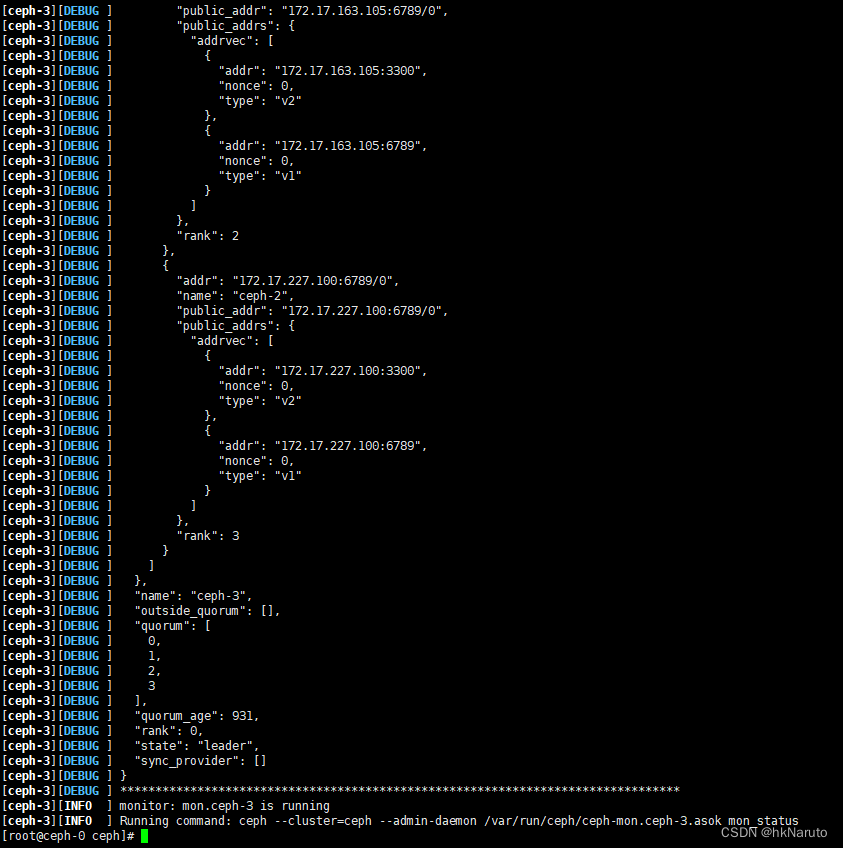

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[ceph-3][DEBUG ] ********************************************************************************

[ceph-3][DEBUG ] status for monitor: mon.ceph-3

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "election_epoch": 8,

[ceph-3][DEBUG ] "extra_probe_peers": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ],

[ceph-3][DEBUG ] "feature_map": {

[ceph-3][DEBUG ] "mon": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "features": "0x3ffddff8ffacffff",

[ceph-3][DEBUG ] "num": 1,

[ceph-3][DEBUG ] "release": "luminous"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "features": {

[ceph-3][DEBUG ] "quorum_con": "4611087854031667199",

[ceph-3][DEBUG ] "quorum_mon": [

[ceph-3][DEBUG ] "kraken",

[ceph-3][DEBUG ] "luminous",

[ceph-3][DEBUG ] "mimic",

[ceph-3][DEBUG ] "osdmap-prune",

[ceph-3][DEBUG ] "nautilus"

[ceph-3][DEBUG ] ],

[ceph-3][DEBUG ] "required_con": "2449958747315912708",

[ceph-3][DEBUG ] "required_mon": [

[ceph-3][DEBUG ] "kraken",

[ceph-3][DEBUG ] "luminous",

[ceph-3][DEBUG ] "mimic",

[ceph-3][DEBUG ] "osdmap-prune",

[ceph-3][DEBUG ] "nautilus"

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "monmap": {

[ceph-3][DEBUG ] "created": "2023-11-11 09:54:05.372287",

[ceph-3][DEBUG ] "epoch": 1,

[ceph-3][DEBUG ] "features": {

[ceph-3][DEBUG ] "optional": [],

[ceph-3][DEBUG ] "persistent": [

[ceph-3][DEBUG ] "kraken",

[ceph-3][DEBUG ] "luminous",

[ceph-3][DEBUG ] "mimic",

[ceph-3][DEBUG ] "osdmap-prune",

[ceph-3][DEBUG ] "nautilus"

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "fsid": "ff72b496-d036-4f1b-b2ad-55358f3c16cb",

[ceph-3][DEBUG ] "min_mon_release": 14,

[ceph-3][DEBUG ] "min_mon_release_name": "nautilus",

[ceph-3][DEBUG ] "modified": "2023-11-11 09:54:05.372287",

[ceph-3][DEBUG ] "mons": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.67.157:6789/0",

[ceph-3][DEBUG ] "name": "ceph-3",

[ceph-3][DEBUG ] "public_addr": "172.17.67.157:6789/0",

[ceph-3][DEBUG ] "public_addrs": {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.67.157:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.67.157:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "rank": 0

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.112.206:6789/0",

[ceph-3][DEBUG ] "name": "ceph-1",

[ceph-3][DEBUG ] "public_addr": "172.17.112.206:6789/0",

[ceph-3][DEBUG ] "public_addrs": {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.112.206:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.112.206:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "rank": 1

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.163.105:6789/0",

[ceph-3][DEBUG ] "name": "ceph-0",

[ceph-3][DEBUG ] "public_addr": "172.17.163.105:6789/0",

[ceph-3][DEBUG ] "public_addrs": {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.163.105:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.163.105:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "rank": 2

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.227.100:6789/0",

[ceph-3][DEBUG ] "name": "ceph-2",

[ceph-3][DEBUG ] "public_addr": "172.17.227.100:6789/0",

[ceph-3][DEBUG ] "public_addrs": {

[ceph-3][DEBUG ] "addrvec": [

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.227.100:3300",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v2"

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] {

[ceph-3][DEBUG ] "addr": "172.17.227.100:6789",

[ceph-3][DEBUG ] "nonce": 0,

[ceph-3][DEBUG ] "type": "v1"

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "rank": 3

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ]

[ceph-3][DEBUG ] },

[ceph-3][DEBUG ] "name": "ceph-3",

[ceph-3][DEBUG ] "outside_quorum": [],

[ceph-3][DEBUG ] "quorum": [

[ceph-3][DEBUG ] 0,

[ceph-3][DEBUG ] 1,

[ceph-3][DEBUG ] 2,

[ceph-3][DEBUG ] 3

[ceph-3][DEBUG ] ],

[ceph-3][DEBUG ] "quorum_age": 931,

[ceph-3][DEBUG ] "rank": 0,

[ceph-3][DEBUG ] "state": "leader",

[ceph-3][DEBUG ] "sync_provider": []

[ceph-3][DEBUG ] }

[ceph-3][DEBUG ] ********************************************************************************

[ceph-3][INFO ] monitor: mon.ceph-3 is running

[ceph-3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-3.asok mon_status

[errno 2] error connecting to the cluster

No key yet? Move on to the next step and check again.
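The mon_status dumps above are long, and the only fields that matter at this point are which monitor each dump belongs to and its state. A minimal sketch, assuming the ceph-deploy output was saved to a file (the function name `mon_states` and the file name `deploy.log` are hypothetical, not part of ceph-deploy), could reduce the log to one line per monitor:

```shell
#!/bin/sh
# Summarize mon_status dumps from a ceph-deploy log read on stdin.
# Prints one "<monitor> <state>" line per dump.
# Assumption: the log uses the "status for monitor: mon.X" and
# "state": "..." lines exactly as they appear in the output above.
mon_states() {
    awk '
        # Remember which monitor the current dump belongs to.
        /status for monitor:/ { mon = $NF }
        # Strip quotes and the trailing comma from the state value.
        /"state":/ { gsub(/[",]/, "", $NF); print mon, $NF }
    '
}
```

Running `mon_states < deploy.log` on the output above should list mon.ceph-3 as leader and the other monitors shown as peon, which matches the "state" fields in the dumps.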

Gather the keys

ceph-deploy gatherkeys ceph-0 ceph-1 ceph-2 ceph-3

Log

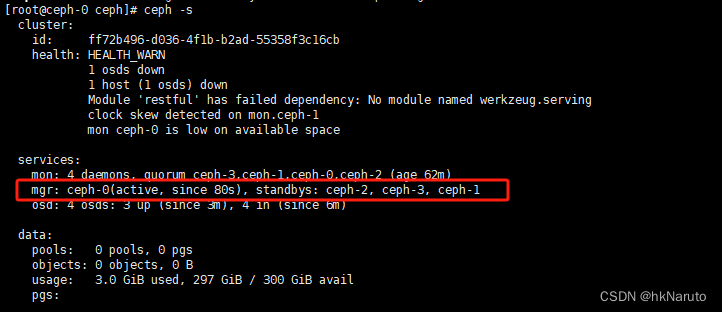

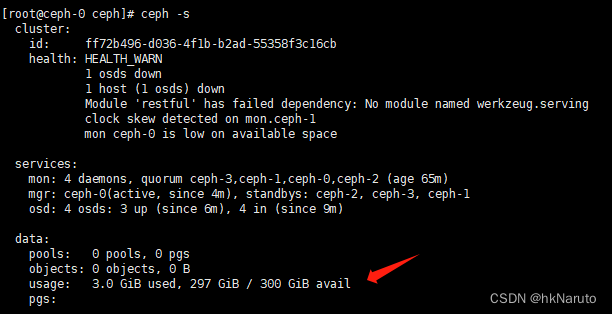

ceph -s now shows the daemon services.
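Since the earlier connection error looked like a missing key, it can help to confirm that gatherkeys actually left the keyring files in the working directory. The sketch below lists the ones that are absent. The file names are an assumption based on what ceph-deploy 2.0.1 normally writes, and `missing_keyrings` is a hypothetical helper name:

```shell
#!/bin/sh
# List keyrings that are missing from a directory after `ceph-deploy gatherkeys`.
# Assumption: these are the file names ceph-deploy 2.0.1 normally writes.
# Usage: missing_keyrings [DIR]   (DIR defaults to the current directory)
missing_keyrings() {
    dir=${1:-.}
    for name in client.admin bootstrap-mds bootstrap-mgr bootstrap-osd bootstrap-rgw; do
        # Print the file name only when the keyring is not there.
        [ -f "$dir/ceph.$name.keyring" ] || echo "ceph.$name.keyring"
    done
}
```

An empty result from `missing_keyrings` in the ceph-deploy working directory suggests all expected keyrings were gathered.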

Deploy the admin nodes

ceph-deploy admin ceph-0 ceph-1 ceph-2 ceph-3

[root@ceph-0 ceph]# ceph-deploy admin ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin ceph-0 ceph-1 ceph-2 ceph-3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xffff91add0f0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-0', 'ceph-1', 'ceph-2', 'ceph-3']

[ceph_deploy.cli][INFO ] func : <function admin at 0xffff91c777d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-0

[ceph-0][DEBUG ] connected to host: ceph-0

[ceph-0][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-0][DEBUG ] detect machine type

[ceph-0][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-1

dhclient(1613) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-2

dhclient(1626) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-3

dhclient(1634) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-3][DEBUG ] connected to host: ceph-3

[ceph-3][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-3][DEBUG ] detect machine type

[ceph-3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

ceph -s

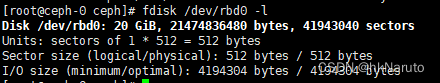
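The status is only shown as a screenshot here, so for scripting it may be handy to pull the health field out of the text form of the same output. This is a small sketch, assuming the usual Nautilus layout where `ceph -s` prints a line of the form `health: HEALTH_OK`. The function name `cluster_health` is hypothetical:

```shell
#!/bin/sh
# Extract the health value (HEALTH_OK, HEALTH_WARN or HEALTH_ERR)
# from `ceph -s` text supplied on stdin.
# Assumption: the output contains a line whose first word is "health:".
cluster_health() {
    awk '$1 == "health:" { print $2; exit }'
}
```

On a node that has the admin keyring, `ceph -s | cluster_health` would print just the health value. The built-in `ceph health` command gives similar information directly.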

Deploy the OSDs

ceph-deploy osd create ceph-0 --data /dev/vdb

ceph-deploy osd create ceph-1 --data /dev/vdb

ceph-deploy osd create ceph-2 --data /dev/vdb

ceph-deploy osd create ceph-3 --data /dev/vdb

Logs for ceph-0, ceph-1, ceph-2 and ceph-3

[root@ceph-0 ceph]# ceph-deploy osd create ceph-0 --data /dev/vdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph-0 --data /dev/vdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xffff9cc8cd20>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-0

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0xffff9cd1bed0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/vdb

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdb

[ceph-0][DEBUG ] connected to host: ceph-0

[ceph-0][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-0][DEBUG ] detect machine type

[ceph-0][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: bclinux 21.10U3 21.10U3 LTS

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-0

[ceph-0][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-0][WARNIN] osd keyring does not exist yet, creating one

[ceph-0][DEBUG ] create a keyring file

[ceph-0][DEBUG ] find the location of an executable

[ceph-0][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/vdb

[ceph-0][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-0][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new c1870346-8e19-4788-b1dd-19bd75d6ec2f

[ceph-0][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-837353d8-91ff-4418-bc8f-a655d94049d4 /dev/vdb

[ceph-0][WARNIN] stdout: Physical volume "/dev/vdb" successfully created.

[ceph-0][WARNIN] stdout: Volume group "ceph-837353d8-91ff-4418-bc8f-a655d94049d4" successfully created

[ceph-0][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 25599 -n osd-block-c1870346-8e19-4788-b1dd-19bd75d6ec2f ceph-837353d8-91ff-4418-bc8f-a655d94049d4

[ceph-0][WARNIN] stdout: Logical volume "osd-block-c1870346-8e19-4788-b1dd-19bd75d6ec2f" created.

[ceph-0][WARNIN] Running command: /usr/bin/ceph-authtool --gen-print-key

[ceph-0][WARNIN] Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0

[ceph-0][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-837353d8-91ff-4418-bc8f-a655d94049d4/osd-block-c1870346-8e19-4788-b1dd-19bd75d6ec2f

[ceph-0][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3

[ceph-0][WARNIN] Running command: /usr/bin/ln -s /dev/ceph-837353d8-91ff-4418-bc8f-a655d94049d4/osd-block-c1870346-8e19-4788-b1dd-19bd75d6ec2f /var/lib/ceph/osd/ceph-0/block

[ceph-0][WARNIN] Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap

[ceph-0][WARNIN] stderr: 2023-11-11 10:48:34.800 ffff843261e0 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

[ceph-0][WARNIN] 2023-11-11 10:48:34.800 ffff843261e0 -1 AuthRegistry(0xffff7c081d58) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

[ceph-0][WARNIN] stderr: got monmap epoch 1

[ceph-0][WARNIN] Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQB/605l8419HxAAhIoXMxEJCV5J6qOB8AyHrw==

[ceph-0][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring

[ceph-0][WARNIN] added entity osd.0 auth(key=AQB/605l8419HxAAhIoXMxEJCV5J6qOB8AyHrw==)

[ceph-0][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring

[ceph-0][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/

[ceph-0][WARNIN] Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid c1870346-8e19-4788-b1dd-19bd75d6ec2f --setuser ceph --setgroup ceph

[ceph-0][WARNIN] --> ceph-volume lvm prepare successful for: /dev/vdb

[ceph-0][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[ceph-0][WARNIN] Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-837353d8-91ff-4418-bc8f-a655d94049d4/osd-block-c1870346-8e19-4788-b1dd-19bd75d6ec2f --path /var/lib/ceph/osd/ceph-0 --no-mon-config

[ceph-0][WARNIN] Running command: /usr/bin/ln -snf /dev/ceph-837353d8-91ff-4418-bc8f-a655d94049d4/osd-block-c1870346-8e19-4788-b1dd-19bd75d6ec2f /var/lib/ceph/osd/ceph-0/block

[ceph-0][WARNIN] Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block

[ceph-0][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3

[ceph-0][WARNIN] Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[ceph-0][WARNIN] Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-c1870346-8e19-4788-b1dd-19bd75d6ec2f

[ceph-0][WARNIN] stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-c1870346-8e19-4788-b1dd-19bd75d6ec2f.service → /usr/lib/systemd/system/ceph-volume@.service.

[ceph-0][WARNIN] Running command: /usr/bin/systemctl enable --runtime ceph-osd@0

[ceph-0][WARNIN] stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /usr/lib/systemd/system/ceph-osd@.service.

[ceph-0][WARNIN] Running command: /usr/bin/systemctl start ceph-osd@0

[ceph-0][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0

[ceph-0][WARNIN] --> ceph-volume lvm create successful for: /dev/vdb

[ceph-0][INFO ] checking OSD status...

[ceph-0][DEBUG ] find the location of an executable

[ceph-0][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-0 is now ready for osd use.
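The four osd create invocations differ only in the host name, so they can be generated rather than typed. The following sketch prints the commands instead of running them, which leaves room to review them first. `osd_create_cmds` is a hypothetical helper, and it assumes every host uses the same data device, as in this setup with /dev/vdb:

```shell
#!/bin/sh
# Print one `ceph-deploy osd create` command per host.
# Assumption: all hosts use the same data device.
# Usage: osd_create_cmds DEVICE HOST...
osd_create_cmds() {
    dev=$1
    shift
    for host in "$@"; do
        printf 'ceph-deploy osd create %s --data %s\n' "$host" "$dev"
    done
}
```

For example, `osd_create_cmds /dev/vdb ceph-0 ceph-1 ceph-2 ceph-3` prints the same four commands used in this step. Once they look right, the output can be piped to `sh -e` from the ceph-deploy working directory.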

[root@ceph-0 ceph]# ceph-deploy osd create ceph-1 --data /dev/vdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph-1 --data /dev/vdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xffff87d9ed20>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-1

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0xffff87e2ded0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/vdb

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdb

dhclient(1613) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-1][DEBUG ] connected to host: ceph-1

[ceph-1][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-1][DEBUG ] detect machine type

[ceph-1][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: bclinux 21.10U3 21.10U3 LTS

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-1

[ceph-1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-1][WARNIN] osd keyring does not exist yet, creating one

[ceph-1][DEBUG ] create a keyring file

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/vdb

[ceph-1][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[ceph-1][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 4aa0152e-d817-4583-817b-81ada419624a

[ceph-1][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-89d26557-d392-4a46-8d3d-6904076cd4e0 /dev/vdb

[ceph-1][WARNIN] stdout: Physical volume "/dev/vdb" successfully created.

[ceph-1][WARNIN] stdout: Volume group "ceph-89d26557-d392-4a46-8d3d-6904076cd4e0" successfully created

[ceph-1][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 25599 -n osd-block-4aa0152e-d817-4583-817b-81ada419624a ceph-89d26557-d392-4a46-8d3d-6904076cd4e0

[ceph-1][WARNIN] stdout: Logical volume "osd-block-4aa0152e-d817-4583-817b-81ada419624a" created.

[ceph-1][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[ceph-1][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-1

[ceph-1][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-89d26557-d392-4a46-8d3d-6904076cd4e0/osd-block-4aa0152e-d817-4583-817b-81ada419624a

[ceph-1][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-3

[ceph-1][WARNIN] Running command: /bin/ln -s /dev/ceph-89d26557-d392-4a46-8d3d-6904076cd4e0/osd-block-4aa0152e-d817-4583-817b-81ada419624a /var/lib/ceph/osd/ceph-1/block

[ceph-1][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-1/activate.monmap

[ceph-1][WARNIN] stderr: 2023-11-11 10:49:41.805 ffff89d6d1e0 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

[ceph-1][WARNIN] 2023-11-11 10:49:41.805 ffff89d6d1e0 -1 AuthRegistry(0xffff84081d58) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

[ceph-1][WARNIN] stderr: got monmap epoch 1

[ceph-1][WARNIN] Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-1/keyring --create-keyring --name osd.1 --add-key AQDC605lWArnLhAAEYhGC+H+Jy224yAIJhL0gA==

[ceph-1][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-1/keyring

[ceph-1][WARNIN] added entity osd.1 auth(key=AQDC605lWArnLhAAEYhGC+H+Jy224yAIJhL0gA==)

[ceph-1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/keyring

[ceph-1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/

[ceph-1][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 1 --monmap /var/lib/ceph/osd/ceph-1/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-1/ --osd-uuid 4aa0152e-d817-4583-817b-81ada419624a --setuser ceph --setgroup ceph

[ceph-1][WARNIN] --> ceph-volume lvm prepare successful for: /dev/vdb

[ceph-1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1

[ceph-1][WARNIN] Running command: /bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-89d26557-d392-4a46-8d3d-6904076cd4e0/osd-block-4aa0152e-d817-4583-817b-81ada419624a --path /var/lib/ceph/osd/ceph-1 --no-mon-config

[ceph-1][WARNIN] Running command: /bin/ln -snf /dev/ceph-89d26557-d392-4a46-8d3d-6904076cd4e0/osd-block-4aa0152e-d817-4583-817b-81ada419624a /var/lib/ceph/osd/ceph-1/block

[ceph-1][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-1/block

[ceph-1][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-3

[ceph-1][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1

[ceph-1][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-1-4aa0152e-d817-4583-817b-81ada419624a

[ceph-1][WARNIN] stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-1-4aa0152e-d817-4583-817b-81ada419624a.service → /usr/lib/systemd/system/ceph-volume@.service.

[ceph-1][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@1

[ceph-1][WARNIN] stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@1.service → /usr/lib/systemd/system/ceph-osd@.service.

[ceph-1][WARNIN] Running command: /bin/systemctl start ceph-osd@1

[ceph-1][WARNIN] --> ceph-volume lvm activate successful for osd ID: 1

[ceph-1][WARNIN] --> ceph-volume lvm create successful for: /dev/vdb

[ceph-1][INFO ] checking OSD status...

[ceph-1][DEBUG ] find the location of an executable

[ceph-1][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host ceph-1 is now ready for osd use.
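
The ceph-1 run above shows what `ceph-deploy osd create` does on the target host: `ceph-volume` registers a new OSD, builds a volume group and logical volume on /dev/vdb, formats it as BlueStore, and enables and starts the `ceph-osd@1` unit. The same command is then repeated by hand for each remaining host. As a rough sketch, assuming every host uses /dev/vdb as its data disk, the repetition and a quick check of the saved output could be scripted as below. The helper names `create_osds` and `activated_osd_ids` and the `DRY_RUN` and `DATA_DEV` variables are hypothetical and are not part of ceph-deploy.

```shell
#!/usr/bin/env bash
# Sketch only: the helper names and the DRY_RUN / DATA_DEV variables
# are made up for this example and are not part of ceph-deploy.

# Run "ceph-deploy osd create" once per host given as an argument.
# With DRY_RUN=1 the commands are printed instead of executed.
create_osds() {
    local data_dev="${DATA_DEV:-/dev/vdb}"   # data disk used in this walkthrough
    local host
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ceph-deploy osd create $host --data $data_dev"
        else
            # Stop at the first host that fails so the error stays visible.
            ceph-deploy osd create "$host" --data "$data_dev" || return 1
        fi
    done
}

# Read saved ceph-deploy output on stdin and print the ID of every OSD
# that ceph-volume reported as successfully activated.
activated_osd_ids() {
    sed -n 's/.*ceph-volume lvm activate successful for osd ID: \([0-9][0-9]*\).*/\1/p'
}
```

Running `DRY_RUN=1 create_osds ceph-2 ceph-3` first prints the commands without touching any disk. Piping a saved log through `activated_osd_ids` lists the OSD IDs that came up, which can then be compared against `ceph osd stat`.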

[root@ceph-0 ceph]# ceph-deploy osd create ceph-2 --data /dev/vdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create ceph-2 --data /dev/vdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xffff9a808d20>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : None

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : ceph-2

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0xffff9a897ed0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/vdb

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/vdb

dhclient(1626) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

dhclient(1626) is already running - exiting.

This version of ISC DHCP is based on the release available

on ftp.isc.org. Features have been added and other changes

have been made to the base software release in order to make

it work better with this distribution.

Please report issues with this software via:

https://gitee.com/src-openeuler/dhcp/issues

exiting.

[ceph-2][DEBUG ] connected to host: ceph-2

[ceph-2][DEBUG ] detect platform information from remote host

21.10U3 LTS

bclinux

[ceph-2][DEBUG ] detect machine type

[ceph-2][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: bclinux 21.10U3 21.10U3 LTS

[ceph_deploy.osd][DEBUG ] Deploying osd to ceph-2

[ceph-2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-2][WARNIN] osd keyring does not exist yet, creating one

[ceph-2][DEBUG ] create a keyring file

[ceph-2][DEBUG ] find the location of an executable

[ceph-2][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/vdb

[ceph-2][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[ceph-2][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new fe7a2030-94ac-4bbb-af27-7950509b0960

[ceph-2][WARNIN] Running command: /usr/sbin/vgcreate --force --yes ceph-8d4ef242-6dc1-4161-9b6a-15a626b86c6f /dev/vdb

[ceph-2][WARNIN] stdout: Physical volume "/dev/vdb" successfully created.

[ceph-2][WARNIN] stdout: Volume group "ceph-8d4ef242-6dc1-4161-9b6a-15a626b86c6f" successfully created